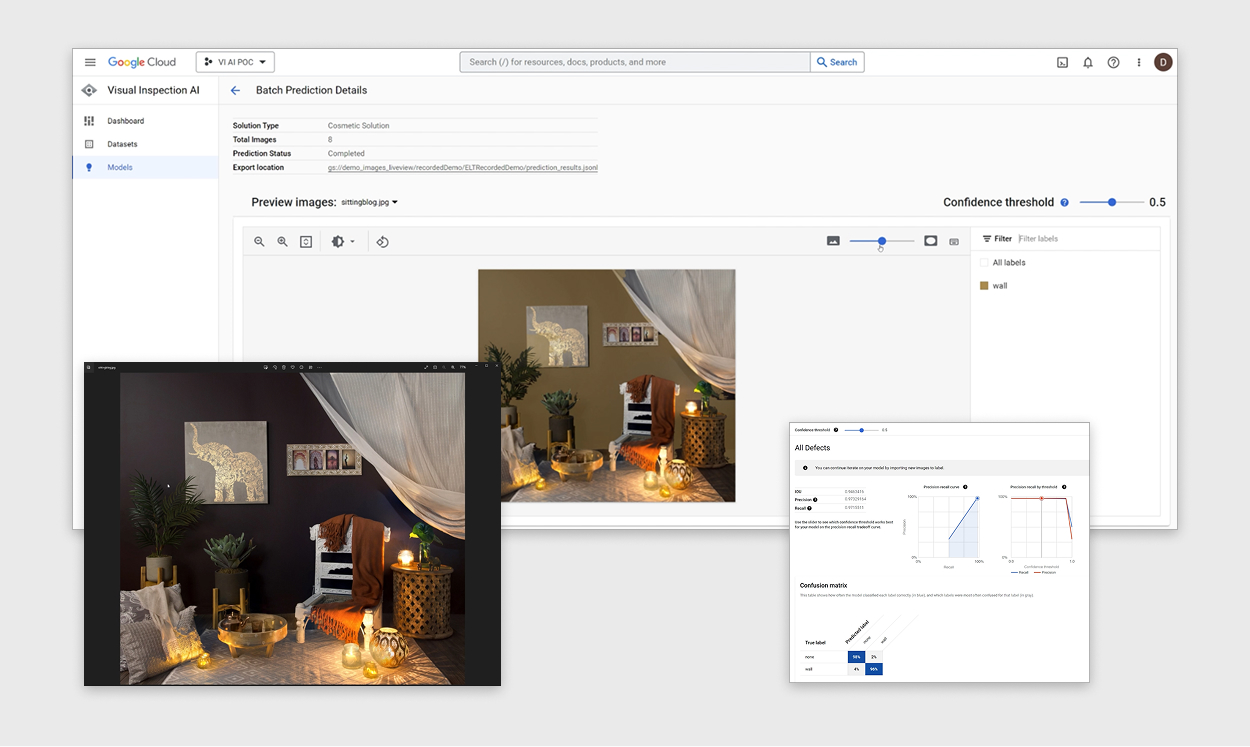

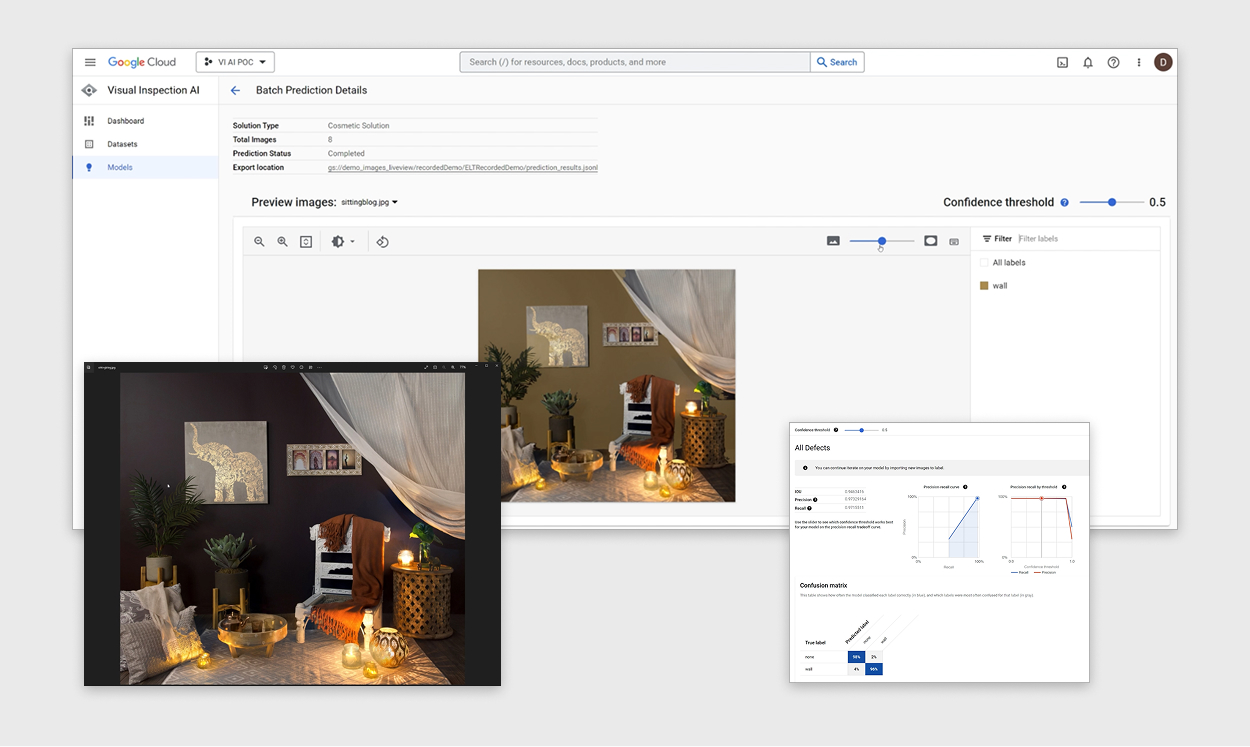

Around 2022–23, I collaborated with Google’s Cloud Vision AI development team on model‑training efforts supporting several BEHR digital visualization initiatives. The goal was to train a model capable of identifying paintable surfaces in user‑uploaded photos, many of which were low‑resolution, poorly lit, or captured on older devices. To support this, we curated twelve years of user‑generated content from BEHR’s Paint Your Place platform and created multiple batches of segmentation masks focused on detecting wall borders and other paintable regions.

Google provided access to their training environment and guided us through the fundamentals, then gave our team the freedom to experiment with different masking approaches and data strategies. Watching the model’s accuracy improve or regress from one training cycle to the next was an invaluable learning experience and deepened my understanding of how computer‑vision models interpret real‑world imagery. While my role centered on creative problem‑solving, dataset preparation, and cross‑team collaboration, the project offered meaningful hands‑on exposure to Cloud Vision AI workflows that later informed my work on BEHR’s next generation of visualization tools.